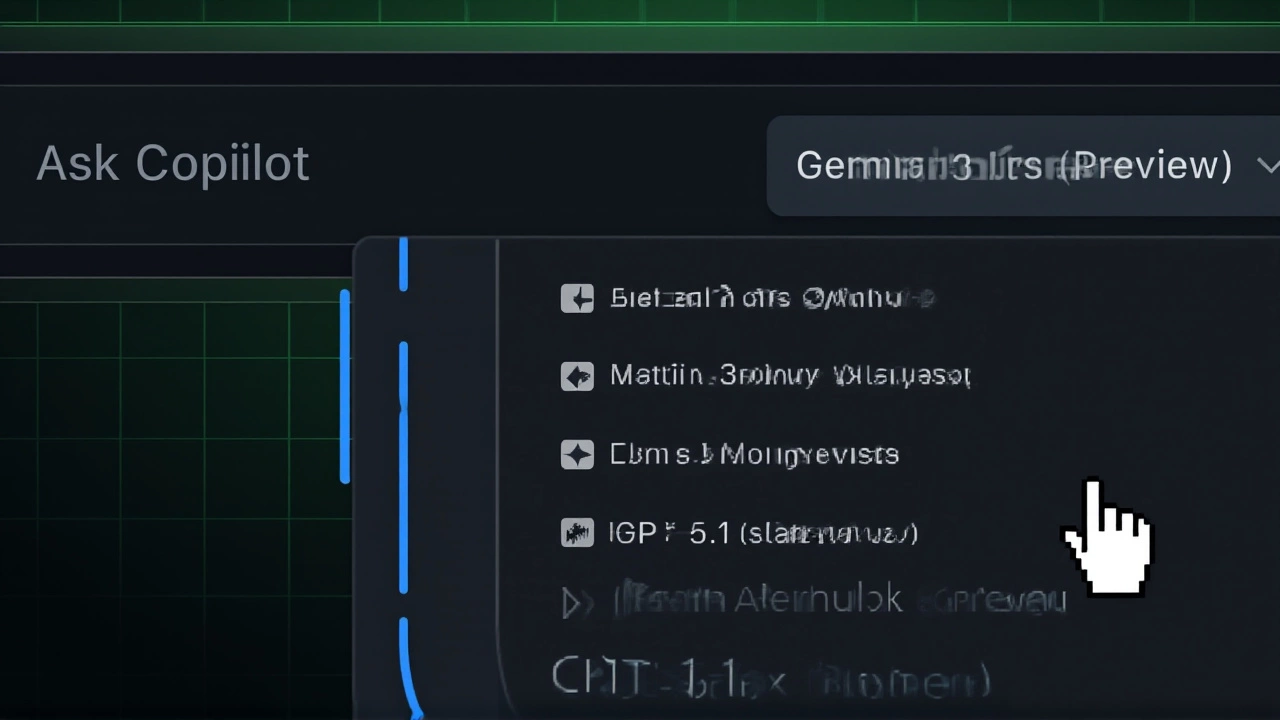

On November 18, 2025, Google quietly changed the game for developers and enterprise users alike, launching Gemini 3 Pro—its most powerful AI model yet—with a staggering 1 million token context window and seamless multimodal abilities across GitHub Copilot, Google Workspace, and third-party developer tools. The rollout, announced in three separate blog posts from GitHub, Google Workspace Updates, and the Google Cloud Blog, isn’t just an incremental upgrade. It’s a full-scale reimagining of how AI interacts with code, documents, images, and audio in real-world settings. And the numbers? They’re hard to ignore.

What Makes Gemini 3 Pro Different?

Most AI models today struggle with long-form context. Think of it like trying to read a 500-page novel while only remembering the last three paragraphs. Gemini 3 Pro is built to keep the whole book in view, along with the footnotes, the index, and the author’s drafts. With a 1 million token input window and 64k token output capacity, it can process entire codebases, multi-chapter technical documentation, or hour-long multilingual meetings in a single prompt. The input window is the same size as that of its predecessor, Gemini 2.5 Pro, which also accepted 1 million tokens.
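To get a feel for what a 1 million token window means in practice, here is a minimal Python sketch that estimates whether a set of documents would fit in a single prompt. The four-characters-per-token ratio is a rough rule of thumb assumed for illustration, not Gemini's actual tokenizer, and `estimate_tokens` and `fits_in_window` are hypothetical helper names rather than part of any Google SDK.

```python
# Rough check of whether a batch of documents fits in a 1M-token prompt.
# ASSUMPTION: ~4 characters per token is a heuristic, not Gemini's tokenizer.

INPUT_WINDOW_TOKENS = 1_000_000  # input window size quoted in the article
CHARS_PER_TOKEN = 4              # assumed average, for illustration only


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a string using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_window(documents: list[str], reserve_tokens: int = 0) -> bool:
    """Return True if all documents, plus a reserve for instructions,
    stay within the estimated input window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserve_tokens <= INPUT_WINDOW_TOKENS


if __name__ == "__main__":
    docs = ["def main():\n    pass\n" * 1000, "README text " * 5000]
    print(fits_in_window(docs, reserve_tokens=2_000))
```

For a real budget, a count from the provider's own tokenizer would replace the heuristic; the sketch is only meant to show the order of magnitude involved.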

The image capabilities are equally notable. The gemini-3-pro-image-preview variant is designed to generate pictures that are accurate, not merely plausible. Using real-time data from Google Search, it can create visuals grounded in live stock prices, weather patterns, or even current event timelines. And for the first time, it renders sharp, legible text in diagrams and charts, with output upscaled to as high as 4K without blurring. No more fuzzy screenshots or unreadable equations.

Real-World Impact: Developers and Enterprises Are Already Seeing Results

It’s one thing to boast specs. It’s another when teams are actually using the tool and seeing measurable gains. Joe Binder, Vice President of Product at GitHub in San Francisco, shared early results: developers using Gemini 3 Pro in VS Code solved software challenges with 35% higher accuracy than with Gemini 2.5 Pro. "That’s the kind of potential that translates to developers solving real-world problems with more speed and effectiveness," he said.

Meanwhile, JetBrains reported an improvement of more than 50% in benchmark task completion, according to the company’s Hadi Hariri. JetBrains is now integrating the model into Junie and its AI Assistant, aiming to serve millions of coders globally.

And it’s not just about code. Rakuten, the Tokyo-based e-commerce giant, tested the model on messy, real-world data: transcribing 3-hour multilingual meetings with overlapping speakers. The result was superior speaker identification and accuracy more than 50% better than baseline models. The company also pulled structured data from blurry, poorly scanned invoices and contracts, a task that tends to trip up older systems.

Pricing and Access: Who Gets It, and How Much?

Google didn’t make this a free feature. The gemini-3-pro-preview model costs $2 per million input tokens and $12 per million output tokens for prompts of up to 200,000 tokens. For longer prompts, the rates rise to $4 and $18 respectively. That’s steep, but for enterprises running massive codebases or processing hundreds of documents daily, it can still cost less than staffing teams to do the same work manually.
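The two-tier pricing is easier to reason about with a small calculator. The Python sketch below uses only the rates quoted above; it assumes that the size of the prompt selects the tier for both input and output on a given request, and that a prompt of exactly 200,000 tokens falls in the lower tier. `estimate_cost` is a hypothetical helper name, not an official API.

```python
# Tiered cost estimate for gemini-3-pro-preview, using the article's rates.
# ASSUMPTIONS: the prompt size picks the tier for the whole request, and
# exactly 200,000 prompt tokens still counts as the lower tier.
from dataclasses import dataclass

TIER_THRESHOLD_TOKENS = 200_000


@dataclass(frozen=True)
class Rates:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


STANDARD = Rates(input_per_million=2.00, output_per_million=12.00)
LONG_CONTEXT = Rates(input_per_million=4.00, output_per_million=18.00)


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    rates = STANDARD if input_tokens <= TIER_THRESHOLD_TOKENS else LONG_CONTEXT
    cost = (
        input_tokens * rates.input_per_million
        + output_tokens * rates.output_per_million
    ) / 1_000_000
    return round(cost, 6)


if __name__ == "__main__":
    print(estimate_cost(100_000, 10_000))  # 0.32
    print(estimate_cost(500_000, 20_000))  # 2.36
```

Under these assumptions, a 100,000-token prompt with a 10,000-token reply comes to about $0.32, while a 500,000-token prompt with a 20,000-token reply comes to about $2.36.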

The image variant costs $2 per million text tokens, plus $0.134 per image output, with adjustments based on resolution. And yes, that means generating a 4K diagram with embedded text costs more than a simple icon.
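The same arithmetic applies to the image variant. The sketch below assumes a flat charge per generated image; because the resolution adjustments are not broken down here, the per-image rate is left as a parameter that defaults to the quoted $0.134. `estimate_image_job_cost` is a hypothetical name used only for illustration.

```python
# Cost estimate for the image variant, using the article's quoted rates.
# ASSUMPTION: one flat rate per image; resolution-based adjustments are not
# specified, so callers pass their own per_image_rate where it differs.

TEXT_RATE_PER_MILLION = 2.00  # USD per 1M text tokens
BASE_IMAGE_RATE = 0.134       # USD per generated image


def estimate_image_job_cost(
    text_tokens: int,
    images: int,
    per_image_rate: float = BASE_IMAGE_RATE,
) -> float:
    """Return the estimated USD cost of a job with text input and image output."""
    if text_tokens < 0 or images < 0:
        raise ValueError("counts must be non-negative")
    text_cost = text_tokens * TEXT_RATE_PER_MILLION / 1_000_000
    return round(text_cost + images * per_image_rate, 6)


if __name__ == "__main__":
    # 50,000 text tokens and three images at the base rate.
    print(estimate_image_job_cost(50_000, 3))
```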

Access is rolling out gradually across GitHub Copilot Pro, Pro+, Business, and Enterprise tiers. For Google Workspace users, it’s coming to Business plans (Starter through Plus), Enterprise plans, Education plans (Fundamentals through Plus), Nonprofits, and even Frontline plans. Admins control everything through the existing Generative AI settings in the Admin console, with no new tools needed. Full rollout should wrap within 15 days.

Why This Matters Beyond the Tech Crowd

This isn’t just a developer tool. It’s a productivity multiplier for lawyers reviewing 100-page contracts, journalists analyzing hours of interview audio, medical researchers sifting through decades of clinical notes, and even small businesses trying to digitize old paper records. Gemini 3 Pro goes beyond summarizing and connects dots across modalities. Given a blurry photo of a receipt, for example, it can recognize the handwritten date, extract the vendor name, and cross-reference it with a known business database.

And while Google hasn’t shared details on its mixture-of-experts architecture, the early results point to a model tuned for real-world messiness rather than clean lab data. Overlapping voices, blurry images, and handwritten notes are exactly the edge cases where testers report the biggest gains.

What’s Next?

Expect tighter integrations with Cursor, where Co-founder Sualeh Asif in New York City cited a "noticeable improvement in frontend quality," and possibly Figma, where Chief Design Officer Loredana Crisan in San Francisco gave strong, if unquoted, support.

One thing’s clear: the AI arms race just got a new benchmark. And this time, Google didn’t just raise the bar—it rebuilt the track.

Frequently Asked Questions

How does Gemini 3 Pro improve developer productivity compared to previous models?

Gemini 3 Pro increases developer productivity by pairing a 1 million token context window, large enough to analyze entire codebases in one go, with stronger coding performance. Early testing by GitHub showed a 35% improvement in code-solving accuracy over Gemini 2.5 Pro. JetBrains reported over 50% more benchmark tasks completed, and Cursor noted better frontend code generation. In practice, that should mean fewer back-and-forths, less manual context-switching, and faster iteration cycles.

Who can access Gemini 3 Pro right now, and how?

On the GitHub side, access is currently limited to Copilot Pro, Pro+, Business, and Enterprise subscribers. For Google Workspace users, it’s rolling out across Business, Enterprise, Education, Nonprofit, and Frontline plans over 15 days. Administrators enable it through the existing Generative AI settings in the Google Workspace Admin console, with no new tools required. Free users won’t get access until further notice.

What’s the pricing structure for Gemini 3 Pro?

Input costs $2 per million tokens for prompts of up to 200,000 tokens, rising to $4 per million for longer prompts. Output is $12 per million tokens at the lower tier and $18 per million at the higher one. The image variant charges $2 per million text tokens and $0.134 per image output, with higher costs for 4K resolution. For enterprises processing thousands of documents or hours of audio daily, the cost may be offset by reduced labor and faster turnaround times.

Can Gemini 3 Pro handle multilingual and noisy audio data?

Yes. Rakuten tested it on 3-hour multilingual meetings with overlapping speakers and reported superior speaker identification and transcription accuracy, outperforming baseline models by over 50%. Those results suggest the model copes well with real-world audio challenges such as overlapping speech and switching between languages, which makes it a strong candidate for global teams, legal depositions, and customer service call analysis.

How does the image generation differ from previous AI tools?

Unlike older models that generate images from the prompt alone, Gemini 3 Pro’s image variant uses Google Search grounding to pull live data, like current stock prices or weather forecasts, before generating visuals. It renders legible text in diagrams and upscales cleanly to 2K or 4K. This means charts, infographics, and technical schematics stay readable and accurate, not just visually appealing.

Is this rollout limited to enterprise users?

Not entirely, but paid plans come first. Google is prioritizing enterprise, education, and nonprofit plans, and individual developers on GitHub Copilot Pro can use it now. Free users won’t have access yet. This suggests Google is testing scalability and enterprise value before considering broader consumer availability, likely to avoid overwhelming its infrastructure.